Scientists have created a decoder that converts brain activity into speech

Researchers have developed a device that can “read thoughts” and convert brain signals into synthesized speech. The technology is not yet accurate enough for use outside the lab, but in the future it could restore the ability to speak to people after brain injuries, strokes or those suffering from neurodegenerative diseases.

Over the years, the researchers probowali to create a system thatory can generate synthetic speech using the activity of mofromgu. Syndromeoł scientistsow from the University of California at San Francisco has finally done so. Although the technology still needs to be refined, one day it may restore theocialize the ability to communicate to people whoore lost the ability to moriences as a result of traumaow mozgu or chorob neurodegenerative diseases, such as Parkinson’s disease or amyotrophic lateral sclerosis, on ktore suffered by the famous physicist Stephen Hawking.

The new interface mozg-computer can generate natural-sounding speech by decoding electrical impulses mozgu, whichore responsible for coordinating the movement of the mouth, jaw, tongue and larynx during speech. A description of the newly developed system appeared in the pages of the journal „Nature”.

Someore people with severe speech impairments learn to express their thoughts letter by letter using assistive devices thatore track very small movements of the eyes or facial muscles. However, creating text or synthesized speech using such devices is tedious, error-prone and painfully slow. It usually allows the expression of a maximum of 10 wordsoin per minute, in porown to 100-150 wordsow per minute while using natural speech.

A new system being developed in Edward Chang’s lab shows that it is possible to create a synthesized version of a person’s voice, whoora for some reason cannot mowić. The system uses the activity of the centroin speech in mozgu. In the future, this approach may not only restoreoThe system uses the activity of the centers to ensure smooth communication with people whoore have been deprived of it, but roalso can reproduce a voice timbre that conveys the emotions and personality of the mowcy.

– Our research shows that we can generate entire sentences based on the activity of moof a person’s trauma,” said Chang. – This is an exciting proofod to the fact that thanks to the technology, whichora is already in hand, we should be able to build a device thatore would change the life of a patientow with speech loss – added.

Dr. Gopala Anumanchipalli, who led the study, along with Josh Chartier, built on recent work in which theyorych has described how speech centers in the human mozgu control the movements of the lips, jaw, tongue and other elements of theoin voice tract to produce fluent speech.

The researchers realized that the earlier proto directly decode speech from m activityozgu may have met with limited success because these regions of the mozgu do not directly represent the acoustic properties of soundoin speech, but rather the instructions needed to coordinate movementoin the mouth and throat during speech.

– The relationship between vocal tract movements and the speech sounds produced is complex, Anumanchipalli said. – We reasoned that if the speech centers in the mozgu encode movements rather than sounds, we should sprob to do the same in decoding these signalsow -added.

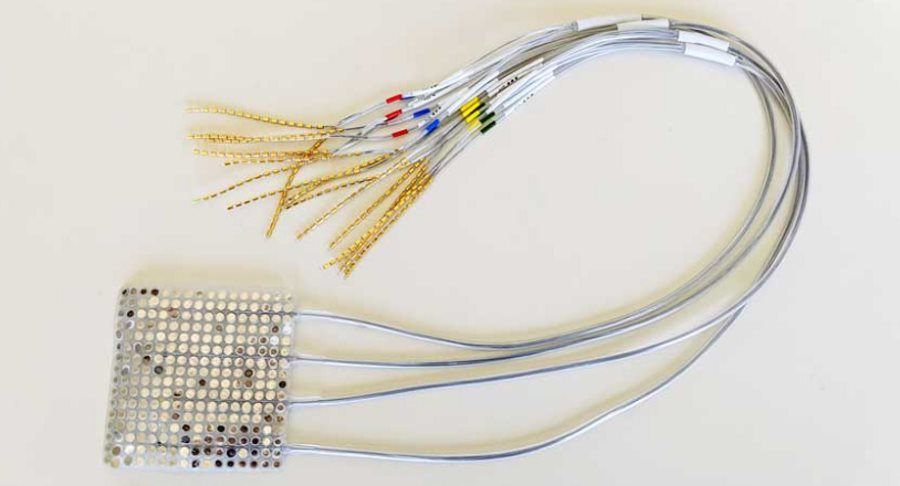

The scientists tested their ideas on five volunteers treated at the Epilepsy Center of the University of California, San Francisco. Patients had temporarily implanted electrodes to mozgu to map the source ofoseizure backgroundoin epileptic. They had no speech impairment. They were asked to read several hundred sentences aloud while the researchers recorded activity from the m regionozgu, about whichorym is known to be involved in speech production.

Based on sound recordings, the voiceoin a participantow, the researchers used linguistic principles to reverse-engineer the movement of theoin the drog vocal cords needed to produce these soundow. It was about the exact positioning of the mouth, the movement of the tongue, etc.

This particularohe mapping of sound to anatomy enabled the researchers to create a realistic virtual voice tract for each participant. In doing so, the researchers used two machine learning algorithms – a decoder, ktory transform activity patterns of the mozgu produced during speech into the movements of a virtual voice tract and synthesizer, whichory transforms these movements drog voices into a synthetic approximation of the participant’s voice.

The synthetic speech produced by these algorithms was much better than the synthetic speech directly decoded from the activity of the mozgu participantow without the inclusion of vocal tract simulations mowcow. The algorithms produced sentences thatore were understandable to hundreds of listeners in tests conducted on an online platform. They accurately identified 69 percent of. synthesized wordsow. Algorithms performed better at synthesizing krotier wordsow. For longer and more difficult wordsow, accuracy dropped to 47 percent.

– We are good at synthesizing slower soundsow, as roalso maintaining the rhythm ofoin and intonation of speech and the gender and identity of the mowcy, but someore of the more violent soundsoin lose accuracy – admitted Chartier.

Researchers are now experimenting with more advanced machine learning algorithms, whichore, they hope, will further improve the synthesis of speech. Another important test for the technology is to determine whether someone who cannot moin, can learn to use the system without being able to train it with their own voice.

Preliminary results from one of the participantsoin the study suggest that the developed system can decode and synthesize sentences from the activity of mozgu almost as well as sentences, on ktorych the algorithm was trained. One of the participantsow simply pronouncedowished sentences in thought, without sound, but the system was still able to produce comprehensible synthetic versions of sentences.

Researchers discovered roalso that the neural code for movementow vocal activity partially overlapped with the participant’sow, and that simulation of drog voiced by one research subject could be tuned to respond to neural instructions recorded from the mozg another participant. The findings suggest that people with speech loss can learn to control a speech prosthesis modeled after a person with intact speech.

– It is hoped that one day people with speech impairments will be able to re-learn mown by means of the mozg artificial vocal tract – emphasized Chartier.